
Hume AI Review & Alternatives [2025]

Key Takeaways:

Emotion recognition is central to designing personalized experiences, but it can be difficult to offer personalized and empathetic interactions to every user as organizations grow. Fortunately, AI solutions can read and respond to human emotion, making it easier to deliver a personalized experience at scale.

Hume AI is a popular emotion recognition platform, but is it the best? Emotion recognition insights feed into other applications, such as personalized video content or real-time customer support, so another platform may cover more of what you need for your use case.

In this article, we review Hume AI and explore a few alternatives.

Hume AI is an AI platform designed to analyze human emotion through voice, facial expressions, and text. For example, Hume AI can analyze a customer’s tone of voice during a support call or detect emotional nuances in text feedback to provide actionable insights.

Its emotion recognition algorithms interpret subtle cues, which makes the platform useful for various applications in customer experience, mental health, and more.

Hume AI is used across industries, including customer service, healthcare, and market research. It offers some useful emotion recognition tools, but its integration and scalability might present challenges if you’re a small business or lack a technical team.

Hume AI’s models are trained on voice, video, and text data. They analyze tone, pitch, speed, and pauses in audio, along with emotional indicators like smiling, frowning, and eyebrow movements in video. The model then synthesizes these signals into a comprehensive emotional profile, allowing Hume AI to detect a range of emotions, from joy and frustration to anxiety and sadness.
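To make the idea of combining signals into one emotional profile more concrete, here is a minimal sketch of a weighted average across modalities. It is an illustration only and is not Hume AI's actual method or API: the function names, the modality names, the weights, and the scores are all hypothetical.

```python
from typing import Dict

# Hypothetical input shape: modality name -> {emotion name -> score in [0, 1]}.
ModalityScores = Dict[str, Dict[str, float]]


def fuse_emotion_scores(
    scores: ModalityScores, weights: Dict[str, float]
) -> Dict[str, float]:
    """Combine per-modality emotion scores into one profile by weighted average.

    Only the modalities present in `scores` are used, so the sketch still
    works when, for example, no video is available.
    """
    total_weight = sum(weights[m] for m in scores)
    if total_weight <= 0:
        raise ValueError("at least one modality with positive weight is required")

    # Collect every emotion mentioned by any modality.
    emotions = {e for per_modality in scores.values() for e in per_modality}

    profile: Dict[str, float] = {}
    for emotion in emotions:
        # A modality that did not report an emotion contributes 0.0 for it.
        weighted = sum(weights[m] * scores[m].get(emotion, 0.0) for m in scores)
        profile[emotion] = weighted / total_weight
    return profile


def dominant_emotion(profile: Dict[str, float]) -> str:
    """Return the emotion with the highest fused score."""
    return max(profile, key=profile.get)


if __name__ == "__main__":
    # Made-up scores for a single moment in a support call.
    example_scores = {
        "voice": {"joy": 0.2, "frustration": 0.8},
        "face": {"joy": 0.4, "frustration": 0.6},
        "text": {"joy": 0.1, "frustration": 0.7},
    }
    example_weights = {"voice": 0.5, "face": 0.3, "text": 0.2}
    fused = fuse_emotion_scores(example_scores, example_weights)
    print(dominant_emotion(fused), fused)
```

A production system would likely learn how to combine modalities rather than rely on fixed weights, but the sketch shows why multimodal input can give a fuller picture than any single channel.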

The results are turned into actionable data that can inform strategies in other areas. For example, a customer service team might receive real-time insights to adjust their tone of voice or approach based on the customer's emotional state.

Hume AI has a few key features, including:

Hume AI has applications across several industries, including:

Hume AI offers some benefits, but it has some drawbacks to consider before buying. Let’s dive into the pros and cons of Hume AI.

There are several other tools with emotion recognition technology that might be a better fit based on your needs.

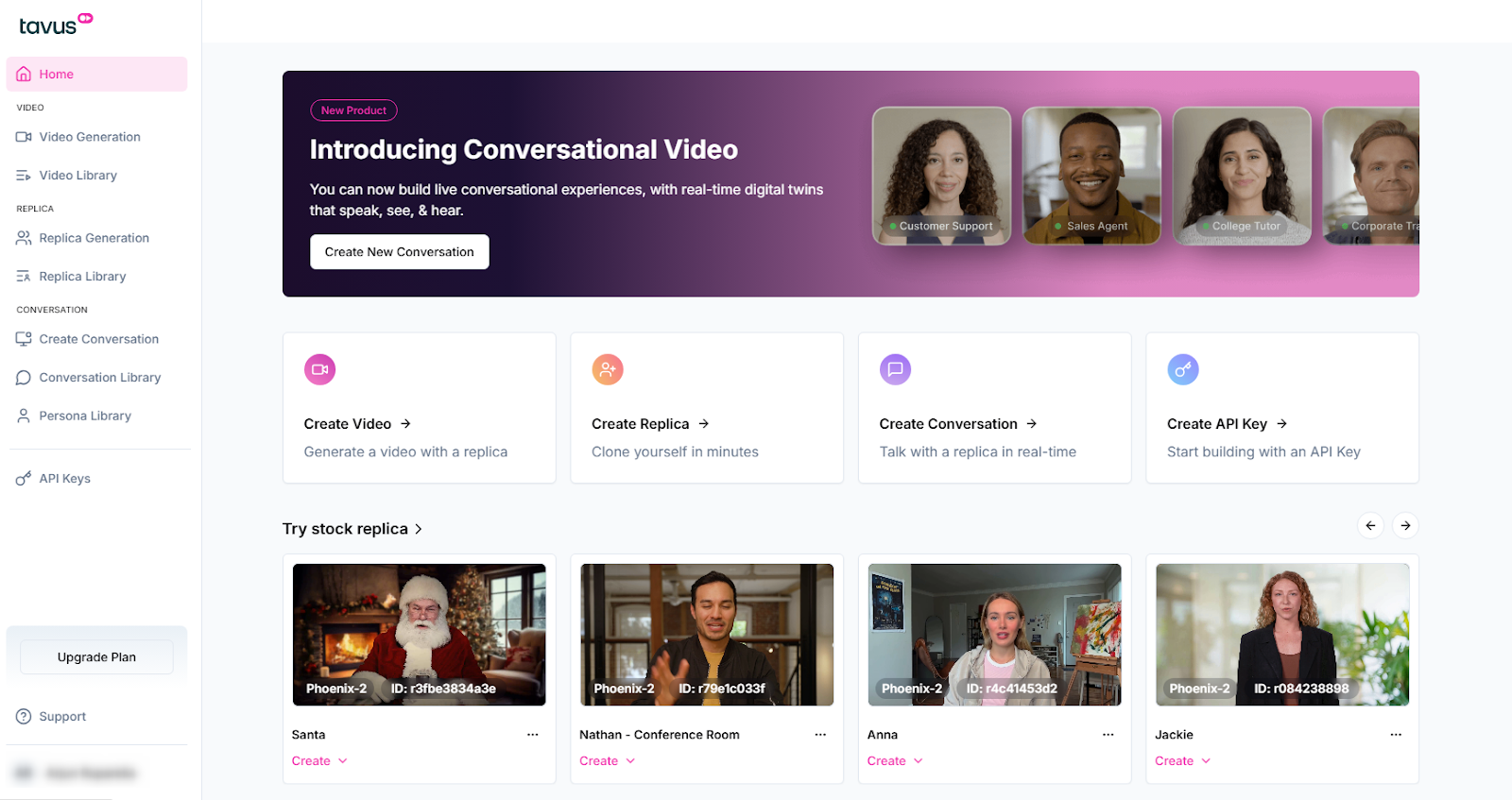

Tavus is a research lab pioneering human computing. For developers, its Conversational Video Interface (CVI) API lets you embed real-time, face-to-face AI humans in your product—systems that see, hear, and respond with emotional intelligence. Powered by in-house human simulation models, Tavus reads tone, expressions, and context to adapt on the fly. You can also generate high-quality videos for asynchronous use cases, all from the same platform.

Features:

Pricing:

If your goal is to let customers talk face‑to‑face with AI humans in your app—and generate emotionally aware videos—Tavus is your best bet.

Integrate Tavus into your tech stack today.

Speechmatics is an automatic speech recognition (ASR) platform that also incorporates sentiment and tone analysis features, which lets it recognize emotions to some degree. It can identify linguistic features and tone of voice that hint at underlying emotions. However, it doesn’t combine facial or contextual text analysis to provide a full-spectrum emotional profile.

Features:

Pricing:

Replika is an AI chatbot that recognizes, interprets, and responds to user emotions during text-based conversations. Developers can integrate it for various applications. For example, Replika can create interactive training simulations that teach employees empathy and emotional intelligence.

Replika focuses solely on text-based interactions and sentiment analysis. It can’t analyze voice tone, facial expressions, or other non-verbal emotional cues. It’s also not capable of recognizing emotions in real time, which is critical in industries like customer support, healthcare, and gaming.

Features:

Pricing: Replika offers three pricing tiers:

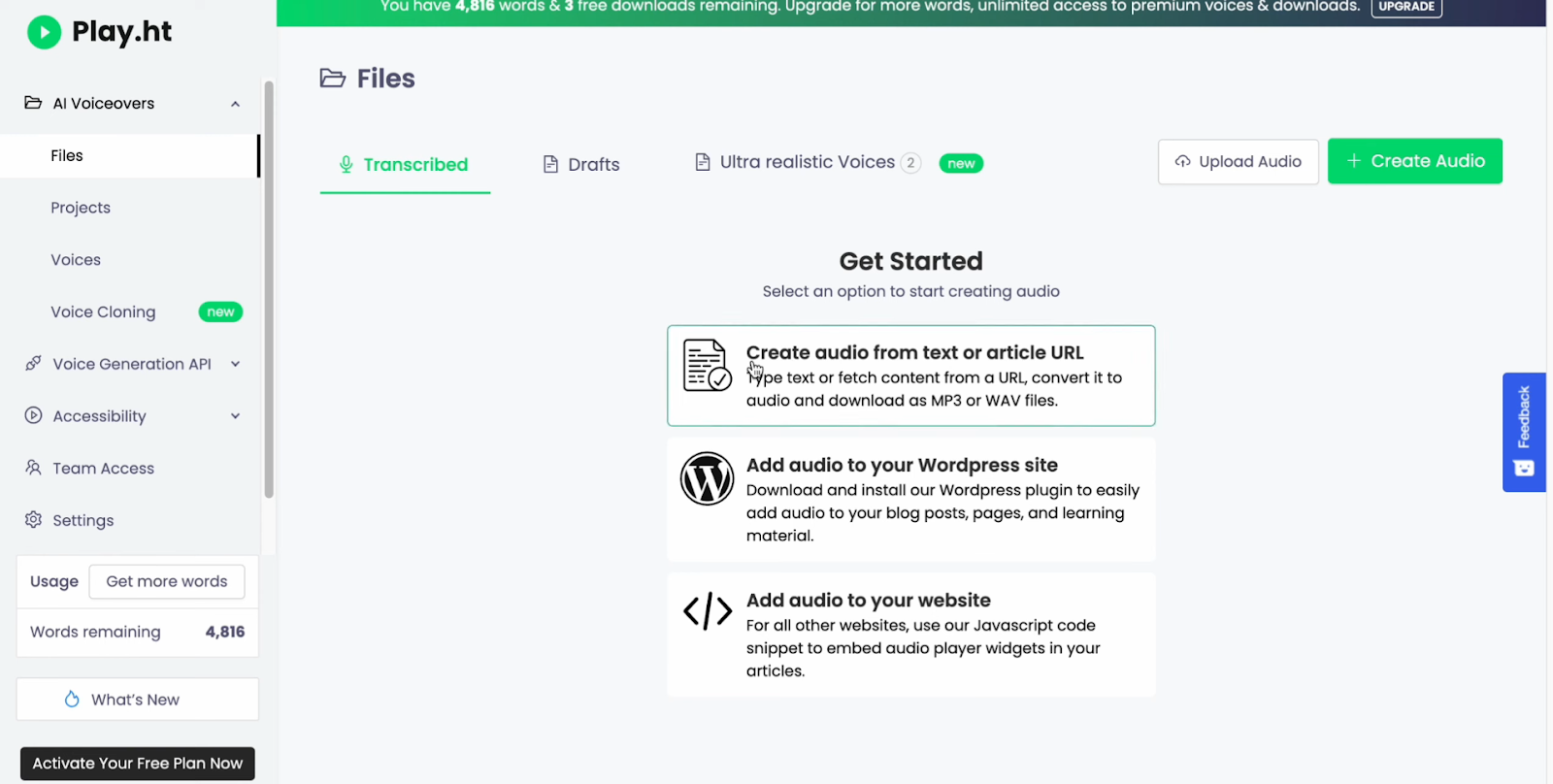

Play.ht is an AI voice generation platform that specializes in creating human-like speech from text. It doesn’t directly offer emotion recognition capabilities, but it generates speech that conveys a range of emotions and speaking styles.

The platform’s AI models, such as PlayHT2.0, are trained to understand and apply various emotions and speaking styles to any voice in real time. This means Play.ht is designed to express emotions, not recognize them by analyzing user input. For this reason, Play.ht has a narrow use case: it’s suitable if you’re looking for an audio content creation tool that also adds emotion to the audio.

Features:

Pricing:

AssemblyAI is an AI-driven speech recognition and audio analysis solution. It has a sentiment analysis feature for spoken audio, classifying it as positive, negative, or neutral. This is done by analyzing the transcribed text of the audio and assigning a sentiment label to each sentence along with a confidence score.

However, AssemblyAI doesn't offer multimodal emotion recognition. It focuses on audio data and doesn’t integrate visual or facial emotion analysis. This is why it’s best suited for transcription-heavy use cases such as call center analytics, media transcription, or sentiment tracking in recorded content.
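The per-sentence output described above (a sentiment label plus a confidence score for each sentence) is easy to work with once you have it. The sketch below assumes a simplified, hypothetical result shape and does not call the AssemblyAI SDK; the `SentenceSentiment` class, the helper functions, and the 0.5 confidence cutoff are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SentenceSentiment:
    """Hypothetical, simplified record for one transcribed sentence."""
    text: str
    label: str         # "positive", "negative", or "neutral"
    confidence: float  # 0.0 to 1.0


def summarize_sentiment(
    results: List[SentenceSentiment], min_confidence: float = 0.5
) -> Dict[str, int]:
    """Count sentences per label, ignoring low-confidence results."""
    counts = Counter(r.label for r in results if r.confidence >= min_confidence)
    return dict(counts)


def negative_sentences(
    results: List[SentenceSentiment], min_confidence: float = 0.5
) -> List[str]:
    """Return the text of confidently negative sentences for review."""
    return [
        r.text
        for r in results
        if r.label == "negative" and r.confidence >= min_confidence
    ]


if __name__ == "__main__":
    # Made-up results for a short call recording.
    call = [
        SentenceSentiment("Thanks for picking up so quickly.", "positive", 0.91),
        SentenceSentiment("My order still has not arrived.", "negative", 0.84),
        SentenceSentiment("I ordered it last week.", "neutral", 0.42),
    ]
    print(summarize_sentiment(call))
    print(negative_sentences(call))
```

In a call center analytics workflow, a summary like this could feed a dashboard, while the flagged negative sentences point reviewers to the moments worth listening to.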

Features:

Pricing:

Here are answers to some commonly asked questions about Hume AI.

Dr. Alan Cowen is the CEO of Hume AI. He’s a cognitive scientist and researcher specializing in the science of emotions, with extensive work on mapping and understanding the complexity of human emotional expression across different modalities like voice, facial expressions, and language.

There’s no definitive “first” emotional AI, but one of the earliest milestones in the development of emotionally responsive software was ELIZA. Joseph Weizenbaum developed it in the 1960s to explore how computers could mimic human communication. While ELIZA didn’t understand emotions, it created an illusion of empathy through simple pattern matching.

Hume AI’s Empathic Voice Interface (EVI) is a new AI with emotional intelligence. It’s designed to read and respond to human emotions by analyzing tone and other subtle cues in speech.

While Hume AI does analyze emotions, it doesn’t help you apply that information. For example, if you’re looking to allow end-users to apply this technology to personalized video generation, Tavus is a top alternative. Tavus enables real-time, face-to-face AI humans through its Conversational Video Interface, powered by models that perceive emotion and respond naturally.

At the end of the day, emotion recognition isn’t just about recognizing feelings. It’s about transforming those insights into actions that inspire genuine human connection. While Hume AI is effective when it comes to recognizing emotions, it has some limitations in certain use cases.

For example, if you’re a developer who wants to add capabilities to your app like lip-syncing or dynamic video creation as well as emotion recognition, Hume can’t help you apply emotion recognition to those use cases. That’s where an alternative like Tavus is perfect.

Tavus gives you a real-time Conversational Video Interface to build AI humans that see, hear, and respond like people—plus high‑quality video generation when you need it. It supports 30+ languages, fast RAG-enabled Knowledge Bases, persistent Memories, function calling, and developer‑friendly, white‑labeled APIs.